I ran the DeepSeek R1 8B model (deepseek-r1:8b) on my local MacBook and set it up as a basic customer support agent for cross-border payments.

I then did the same with the ChatGPT UI and the OpenAI API, asking each the same simple question for a side-by-side comparison.

I compared them on answer quality, execution speed, running cost, data security, and setup.

Highlights:

Answer Quality

I liked DeepSeek R1's visible reasoning, which explains the logic behind each answer.

This helps us tune and debug AI agents for better responses.

The reasoning section is easily collapsible, so we can show or hide it for end users.

The distilled model is resource-efficient but has limited reasoning abilities. A full model can give us:

- Multi-step problem-solving

- Better reasoning

- Self-verification capabilities
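Showing or hiding the reasoning can be handled in the application layer. The sketch below assumes the raw model output wraps its reasoning in a single `<think>...</think>` block, as DeepSeek R1 models typically do; the function names and the sample text are hypothetical.

```python
import re
from typing import NamedTuple

# Matches one <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


class SplitReply(NamedTuple):
    reasoning: str  # text inside <think>...</think> (empty if there is none)
    answer: str     # everything outside the think block


def split_reasoning(raw: str) -> SplitReply:
    """Separate R1-style reasoning from the final answer.

    Assumes the reasoning is wrapped in a single <think>...</think> block.
    """
    match = THINK_RE.search(raw)
    if match is None:
        return SplitReply(reasoning="", answer=raw.strip())
    reasoning = match.group(1).strip()
    # Drop the think block and keep the text around it as the answer.
    answer = (raw[: match.start()] + raw[match.end():]).strip()
    return SplitReply(reasoning=reasoning, answer=answer)


def render(reply: SplitReply, show_reasoning: bool) -> str:
    """Return what the end user sees; the reasoning is optional."""
    if show_reasoning and reply.reasoning:
        return f"[Reasoning]\n{reply.reasoning}\n\n[Answer]\n{reply.answer}"
    return reply.answer


if __name__ == "__main__":
    # Hypothetical raw reply, for illustration only.
    raw = (
        "<think>The user asks about transfer fees. Check the corridor first.</think>\n"
        "Fees depend on the sending and receiving countries."
    )
    print(render(split_reasoning(raw), show_reasoning=False))
```

Keeping the full reasoning around while rendering only the answer means the same reply can serve both the debugging view and the customer-facing view.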

Execution Speed

Running this on my local machine (Apple M1 Pro, 16GB), even the smallest model, R1.b, slowed down my whole system.

A local machine with 16GB of memory is not ideal for generative AI training or inference, so this is understandable.

This should be straightforward to solve by moving to cloud instances on AWS or GCP, which would also let us run DeepSeek's larger models.
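To put a number on the slowdown, Ollama's non-streaming responses include timing fields: `eval_count` (generated tokens) and `eval_duration` (generation time in nanoseconds). The sketch below computes tokens per second from them; it assumes Ollama is running on its default port 11434, and the helper names are hypothetical.

```python
import json
import urllib.request

# Default endpoint of a local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(resp: dict) -> float:
    """Generation speed from an Ollama response.

    eval_count is the number of generated tokens; eval_duration is the
    time spent generating them, in nanoseconds.
    """
    duration_ns = resp.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return resp.get("eval_count", 0) * 1e9 / duration_ns


def generate(model: str, prompt: str) -> dict:
    """Send one non-streaming prompt to the local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as r:
        return json.load(r)
```

With Ollama running and the model available, `tokens_per_second(generate("deepseek-r1:8b", "..."))` gives a figure that can be compared between the laptop and a cloud instance.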

Running Cost

It is free. Running the model locally, we don't pay per token during training or inference.

When we deploy AI models in our applications, this could drastically reduce production costs and subscription fees.
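As a back-of-the-envelope illustration of the per-token costs being avoided, here is a minimal sketch of a monthly bill for a hosted API. The traffic figures and the price per million tokens are hypothetical placeholders, not OpenAI's actual pricing, and it assumes a single blended price for input and output tokens.

```python
def monthly_api_cost(requests_per_day: int, tokens_per_request: int,
                     usd_per_million_tokens: float, days: int = 30) -> float:
    """Estimated monthly spend for a per-token-priced hosted API.

    Assumes one blended price for input and output tokens (a simplification).
    """
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * usd_per_million_tokens
```

For example, 1,000 requests a day at 500 tokens each and a placeholder price of $2 per million tokens gives `monthly_api_cost(1000, 500, 2.0)`, i.e. 30.0 dollars a month. A self-hosted model swaps this per-token line for fixed hardware or hosting costs, so which option is cheaper depends on traffic volume.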

Data Security

I haven't dug into this part much yet, but I will soon. Because B2B and fintech use cases require tight customer data security and privacy, this is critical.

Currently, Orin uses the OpenAI API for its fintech AI agents, but any DeepSeek-based customer deployment will need months of scrutiny and must pass SOC 2 and other compliance standards.

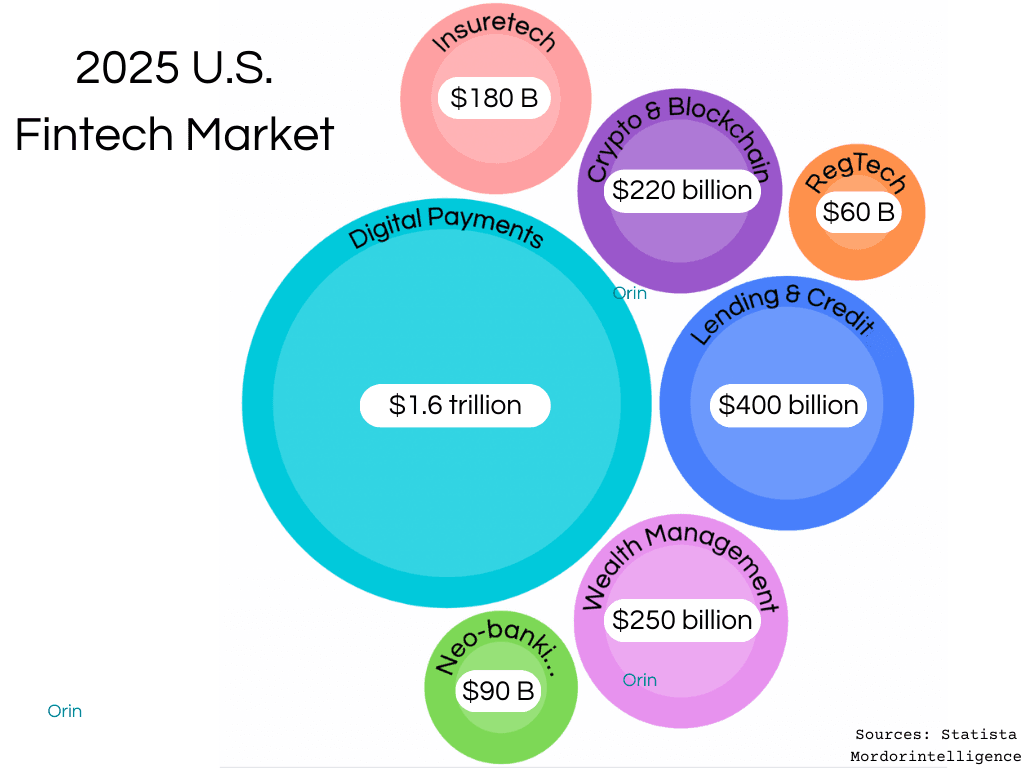

We’re planning to test DeepSeek’s base and distilled models on a few leading fintech AI use cases: Digital Payments, Credit & Lending, and Cryptocurrency.

Setup

I used Ollama to serve the model locally through its API, and Chatbox as a chat interface for asking DeepSeek R1 models questions. I pulled the latest source from the Hugging Face repository:

https://huggingface.co/deepseek-ai/DeepSeek-R1

I checked it out into my local repo and built it, then connected this base model as a provider in Chatbox. Finally, I set the model name, temperature, and other tuning parameters so DeepSeek gives curated answers.
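The same settings can also be passed directly to Ollama's chat API. This is a minimal sketch assuming Ollama runs on its default port and serves the model under the deepseek-r1:8b tag; the agent instructions and helper names are hypothetical. The 0.6 temperature and putting instructions in the user message rather than a system prompt follow the usage recommendations on the DeepSeek-R1 model card.

```python
import json
import urllib.request

# Default chat endpoint of a local Ollama server.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical support-agent instructions, prepended to the user message
# (DeepSeek's R1 usage notes suggest avoiding a separate system prompt).
AGENT_INSTRUCTIONS = (
    "You are a customer support agent for a cross-border payments company. "
    "Answer briefly and ask for the sending and receiving countries if unclear.\n\n"
    "Customer question: "
)


def build_chat_request(question: str, model: str = "deepseek-r1:8b",
                       temperature: float = 0.6) -> dict:
    """Build a non-streaming /api/chat payload for one support question."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": AGENT_INSTRUCTIONS + question}],
        "stream": False,
        "options": {"temperature": temperature},
    }


def ask(question: str, **kwargs) -> str:
    """Send one question to the local Ollama server and return the reply text."""
    body = json.dumps(build_chat_request(question, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_CHAT_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]
```

Calling `ask("How long does an international transfer take?")` returns the raw reply, reasoning block included. Chatbox exposes the same kind of knobs (model name, temperature) through its settings instead of code.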

Coming up in the next article:

Emerging AI agent architectures for fintech with different foundation models

Stay tuned as we develop and #buildinpublic.

Article by

Harish Maiya

Co-Founder

Published on

Jan 28, 2025